Written by Alex Salvatore, Indie iOS Developer with multiple Gen AI apps on the App Store. Last updated: February 2026

I turned a pile of unreadable OCR files into a commercial ebook in a single afternoon. No editor. No team. Just Claude Code and a clear goal.

The Starting Point: Unusable Archive Files

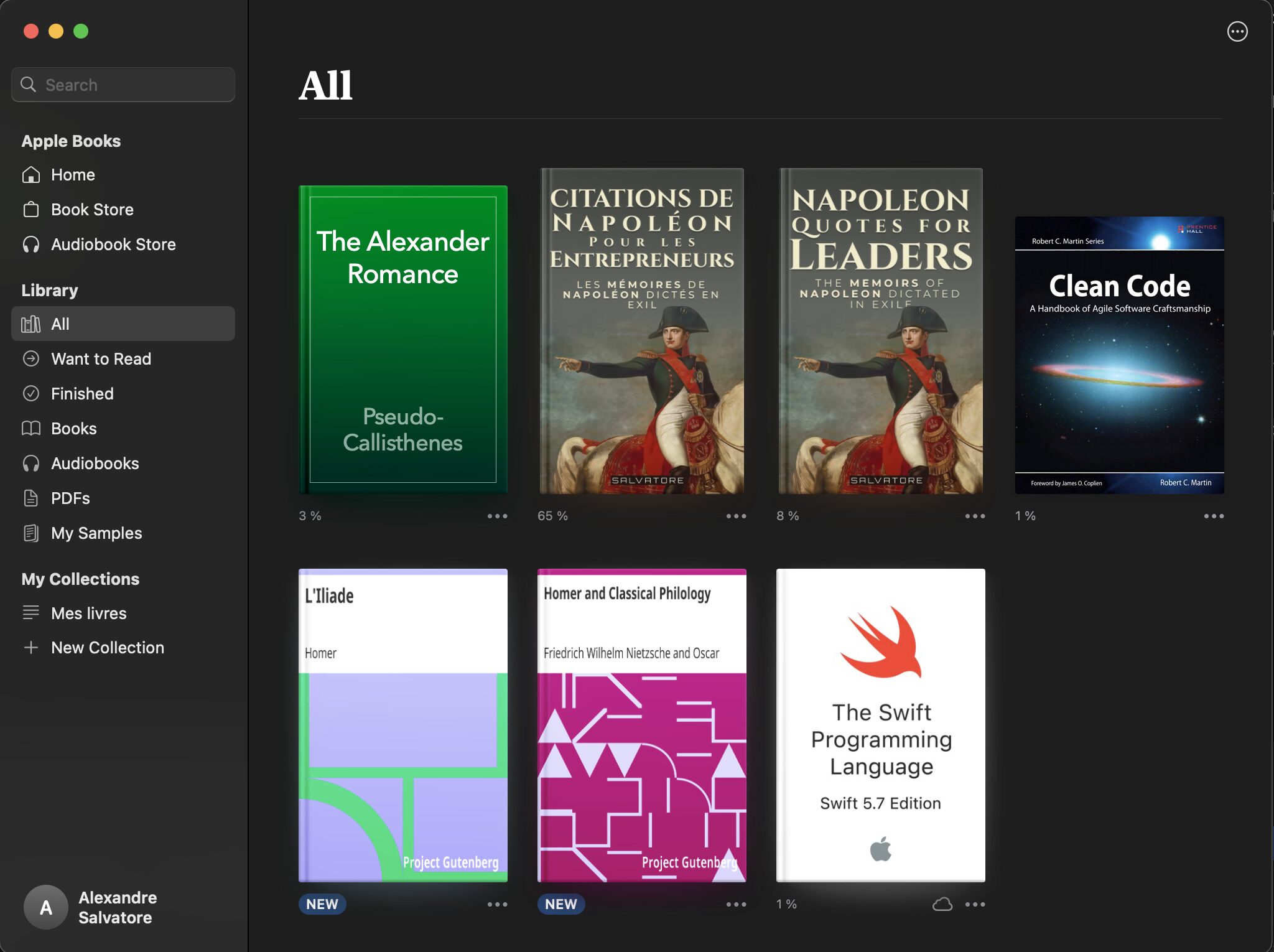

It all started with Ancient Life Coach, my iOS app that runs on ancient texts. While building it, I accumulated dozens of books from digital archive sites. Texts you can’t find in print anymore. Some only in English, others in terrible condition.

These were .txt files generated by old OCR software. Riddled with errors. Unreadable without serious editorial work.

I decided to turn them into ebooks. Not with a publishing house. Not with a team of freelancers. With Claude Code.

The Complete 6-Step Workflow

Here’s exactly what Claude Code did, step by step, to go from raw OCR chaos to a polished product ready for Amazon KDP.

| Step | Task | Time |
|---|---|---|
| 1. Inventory | Analyze all files, rank by commercial potential | Minutes |
| 2. Market Research | Generate keyword lists, estimate search volume | Minutes |
| 3. Editorial Restructuring | Split continuous text into Markdown chapters | Minutes |
| 4. Full Translation | Translate entire book preserving period tone | ~30 min |
| 5. Illustration | Suggest public domain paintings for each chapter | Minutes |
| 6. ePub Generation | Install Pandoc, configure metadata, build file | Minutes |

Step 1: The Inventory

I gave Claude Code the full list of my .txt files. Raw, uncleaned OCR scans of 19th-century texts.

Its first mission: analyze each text and tell me which ones had the best monetization potential.
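If you want a rough, mechanical view of your folder before handing it to Claude Code, a small script can help. The sketch below is not what Claude Code ran and says nothing about commercial potential; it only counts words and estimates OCR noise per file. The function names and the "letters mixed with digits or symbols" heuristic are my own assumptions, so expect to tune them for your texts.

```python
import string
from pathlib import Path

# Quote characters to strip from token edges, in addition to ASCII punctuation.
EDGE_CHARS = string.punctuation + "“”‘’«»"


def is_suspicious(token):
    """Hypothetical heuristic: letters mixed with digits or symbols hint at an OCR misread."""
    core = token.strip(EDGE_CHARS)
    has_letter = any(c.isalpha() for c in core)
    has_other = any(not (c.isalpha() or c in "'’-") for c in core)
    return has_letter and has_other


def inventory(folder):
    """Return one summary dict per .txt file, least noisy files first."""
    rows = []
    for path in sorted(Path(folder).glob("*.txt")):
        text = path.read_text(encoding="utf-8", errors="replace")
        tokens = text.split()
        noisy = sum(1 for t in tokens if is_suspicious(t))
        rows.append({
            "file": path.name,
            "words": len(tokens),
            "noise_ratio": round(noisy / len(tokens), 3) if tokens else 0.0,
        })
    return sorted(rows, key=lambda r: r["noise_ratio"])


# Example usage (the folder name is a placeholder):
# for row in inventory("archive"):
#     print(row)
```

A low noise ratio only means the file has fewer obviously broken tokens. Misreads that still look like words (for example "tbe" for "the") slip through, which is part of why the editorial pass still matters.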

Step 2: Market Research

Claude Code generated keyword lists I could paste directly into Google Ads Keyword Planner (the tool formerly known as Google AdWords). For each book, it provided an estimated search volume and a ranking difficulty score.

The result? A list sorted by commercial potential. With suggested titles and subtitles calibrated for the English-speaking market. “Napoleon Quotes for Leaders.” “Memoirs of St. Helena, Annotated Edition.”

The work of a publisher AND a marketer. In a few minutes.

Step 3: Editorial Restructuring

Raw OCR files are continuous text. No chapters, no structure. Claude Code split each book into separate Markdown files organized by chapter. With titles, chapter numbers, and a proper section hierarchy.

This is the kind of tedious, painstaking work that normally takes days of manual labor. Claude Code handled it as a routine operation.
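To make the idea concrete, here is a minimal sketch of chapter splitting. It assumes chapters start with a line like "CHAPTER IV" or "Chapter 12", which will not hold for every scanned book; the regex, the function names, and the output file naming are all hypothetical. Claude Code worked from the actual text rather than a fixed pattern, so treat this as an illustration of the output shape only.

```python
import re
from pathlib import Path

# Assumed heading pattern: "CHAPTER IV", "Chapter 12", optionally followed by a title.
# Real OCR output varies, so this regex would need adjusting per book.
HEADING = re.compile(
    r"^[ \t]*chapter[ \t]+([ivxlcdm]+|\d+)\b[^\n]*$",
    re.IGNORECASE | re.MULTILINE,
)


def split_chapters(text):
    """Split raw text into (title, body) pairs at each chapter heading.

    Anything before the first heading (front matter) is ignored here.
    """
    matches = list(HEADING.finditer(text))
    chapters = []
    for i, m in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        title = " ".join(m.group(0).split())  # collapse stray whitespace
        body = text[m.end():end].strip()
        chapters.append((title, body))
    return chapters


def write_markdown(chapters, out_dir):
    """Write one numbered Markdown file per chapter and return the paths."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for n, (title, body) in enumerate(chapters, start=1):
        path = out / f"{n:02d}-chapter.md"
        path.write_text(f"# {title}\n\n{body}\n", encoding="utf-8")
        paths.append(path)
    return paths
```

Zero-padded file names keep the chapters in reading order when the folder is sorted, which is convenient when passing them to a converter later.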

Step 4: The Translation

This one impressed me the most. A complete translation of an entire book. Thirty minutes. All while preserving the period tone, the stylistic turns of phrase, and the historical references.

Not a word-for-word translation. An editorial translation. The kind that captures intent and voice, not just meaning.

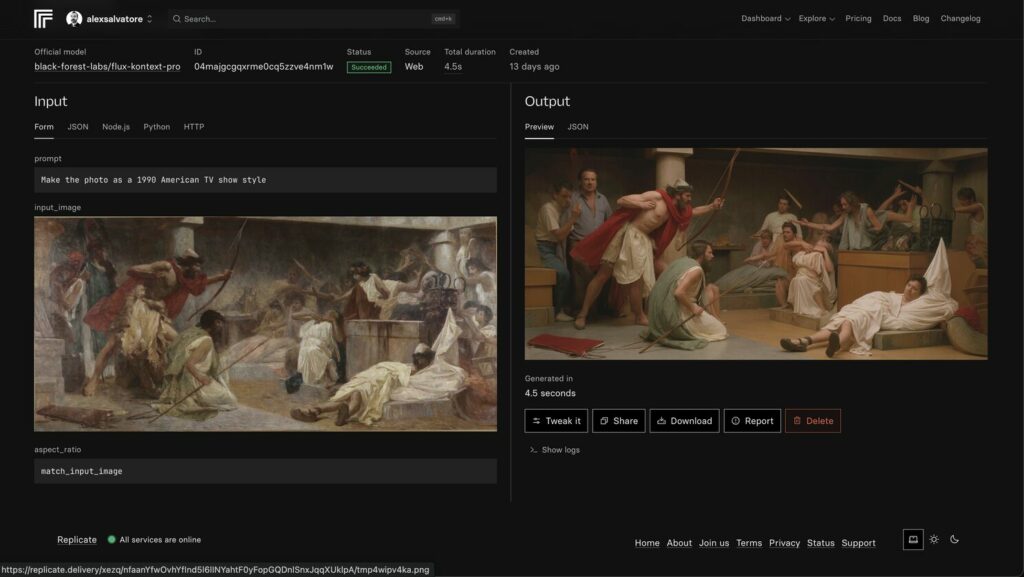

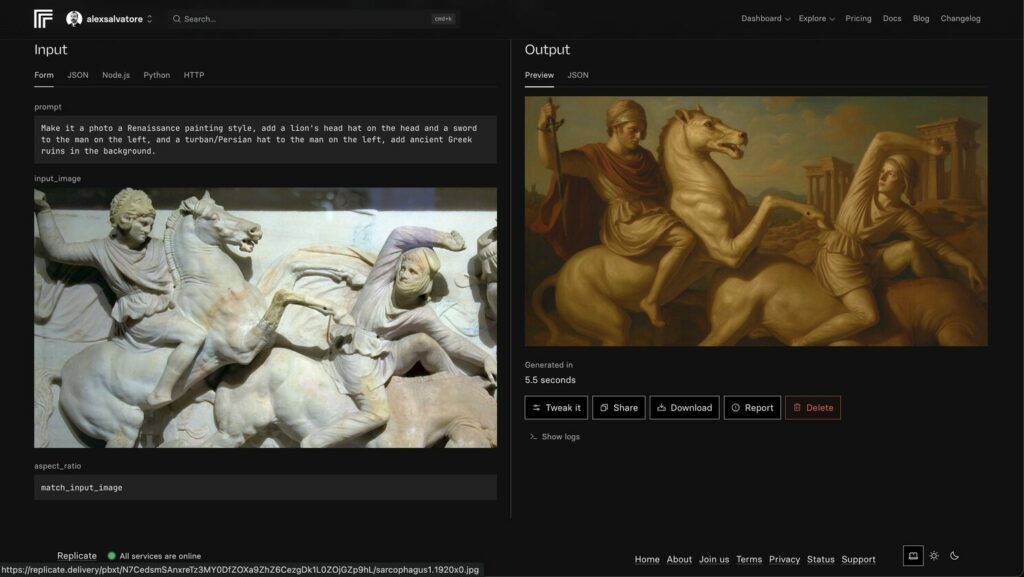

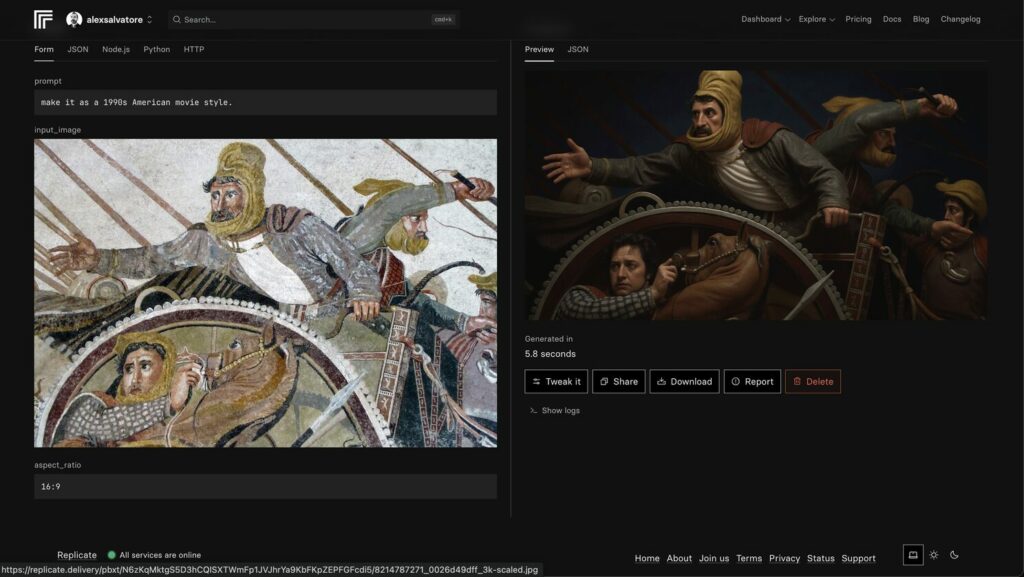

Step 5: Illustration

I asked Claude Code to suggest public domain paintings to illustrate each chapter. It proposed period-appropriate artworks that matched the content. Battle scenes for military chapters, portraits for personal passages.

Step 6: ePub Generation

Claude Code installed the necessary tool (Pandoc) on its own, configured the metadata, and generated the final .ePub file. Ready to upload to Amazon KDP.

No manual configuration. No fighting with formatting tools. Just a clean, structured ebook file at the end of the pipeline.
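For readers curious what a Pandoc build looks like, here is a hedged sketch in Python. I don't know the exact command Claude Code ran; this version uses standard Pandoc options (`--toc`, `--metadata`, `--epub-cover-image`) and assumes Pandoc is already installed. The helper names, file names, and metadata values are placeholders.

```python
import shutil
import subprocess


def build_pandoc_command(chapter_files, output, title, author, cover=None, lang="en"):
    """Assemble a pandoc invocation that turns Markdown chapters into an ePub."""
    cmd = [
        "pandoc",
        *map(str, chapter_files),          # chapters, in reading order
        "-o", str(output),                 # .epub extension selects the ePub writer
        "--toc",                           # generate a table of contents
        "--metadata", f"title={title}",
        "--metadata", f"author={author}",
        "--metadata", f"lang={lang}",
    ]
    if cover:
        cmd.append(f"--epub-cover-image={cover}")
    return cmd


def build_epub(*args, **kwargs):
    """Run the command, failing early if pandoc is not on PATH."""
    if shutil.which("pandoc") is None:
        raise RuntimeError("pandoc is not installed or not on PATH")
    subprocess.run(build_pandoc_command(*args, **kwargs), check=True)


# Example usage (all file names and the author value are placeholders):
# build_epub(["01-chapter.md", "02-chapter.md"], "book.epub",
#            title="Napoleon Quotes for Leaders", author="Your Name",
#            cover="cover.jpg")
```

Building the argument list separately from running it makes the command easy to print and inspect before anything touches your files.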

The Concrete Result

The first ebook produced with this workflow is now available: Napoleon Quotes for Leaders: The Memoirs of Napoleon Dictated in Exile. A rare text, dictated by Napoleon himself on Saint Helena, that I found in the archives of the Bibliothèque nationale de France. Claude Code transformed it into a structured, translated, illustrated ebook ready for sale.

What This Changes About Publishing

A traditional editor would have charged thousands of euros for this work. Market research, OCR cleanup, restructuring, translation, layout, final file generation.

Claude Code did it in one afternoon. For the price of a subscription.

The most striking part: it didn’t just execute tasks. It made strategic editorial decisions. Which title would sell best. Which illustrations would match the content. How to structure chapters for e-reader consumption.

That’s the real potential of Claude Code. Not just writing code. Orchestrating a complete project that blends market analysis, editorial work, translation, and technical production.

Step-by-Step: How to Replicate This Workflow

If you have old texts, scanned documents, or archive material you want to turn into ebooks, here’s how to do it:

- Gather your raw files into a single folder. OCR text files, PDFs, whatever you have.
- Ask Claude Code to inventory them and rank by commercial potential using keyword research.
- Let it restructure the raw text into Markdown chapters with proper headings.
- Request a full translation if needed, specifying you want editorial quality, not literal.
- Generate the ePub using Pandoc with cover image and metadata.
- Upload to Amazon KDP and set your pricing.

The entire process can be done in a single session.

Frequently Asked Questions

Can Claude Code really translate an entire book?

Yes. It translated a full-length 19th-century text in about thirty minutes, maintaining the period tone and historical references. The quality is editorial, not robotic. You should still review the output, but it’s remarkably close to what a professional human translator would deliver.

What types of texts work best for this workflow?

Public domain texts from digital archives are ideal. Historical memoirs, philosophical works, classical literature. Anything where the raw material exists but needs cleaning, restructuring, and modernizing for today’s readers.

Do I need technical skills to follow this process?

You need basic comfort with the command line to run Claude Code and Pandoc. But Claude Code handles the heavy lifting: installing dependencies, configuring files, generating output. If you can type a prompt, you can produce an ebook.

How much does this cost compared to traditional publishing?

A traditional publishing workflow for this kind of project (market research, OCR cleanup, translation, layout, ePub generation) would cost several thousand euros. With Claude Code, the total cost is your monthly subscription. The ROI is immediate if your ebook generates any sales at all.

Is the output quality good enough for Amazon KDP?

The ePub file Claude Code generates passes Amazon’s quality checks. Proper metadata, table of contents, chapter structure, cover image. You can upload it directly to KDP without additional formatting tools.
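If you want a quick local sanity check before uploading, the sketch below inspects the ePub container itself. It is not Amazon's validation and it does not replace a dedicated validator such as EPUBCheck. It only verifies two structural requirements of the ePub container format: the archive's first entry is a `mimetype` file containing `application/epub+zip`, and `META-INF/container.xml` is present. The function name is my own.

```python
import zipfile


def basic_epub_checks(path):
    """Return a list of structural problems found in an ePub file (empty if none)."""
    if not zipfile.is_zipfile(path):
        return ["not a zip archive"]
    problems = []
    with zipfile.ZipFile(path) as zf:
        names = zf.namelist()
        # The container format requires "mimetype" to be the first entry.
        if not names or names[0] != "mimetype":
            problems.append("mimetype is not the first entry")
        elif zf.read("mimetype") != b"application/epub+zip":
            problems.append("unexpected mimetype content")
        # container.xml points readers at the package document.
        if "META-INF/container.xml" not in names:
            problems.append("missing META-INF/container.xml")
    return problems


# Example usage (the file name is a placeholder):
# print(basic_epub_checks("book.epub") or "basic structure looks fine")
```

An empty list only means the container is shaped like an ePub. Metadata, table of contents, and cover still deserve a look in an e-reader preview before you publish.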

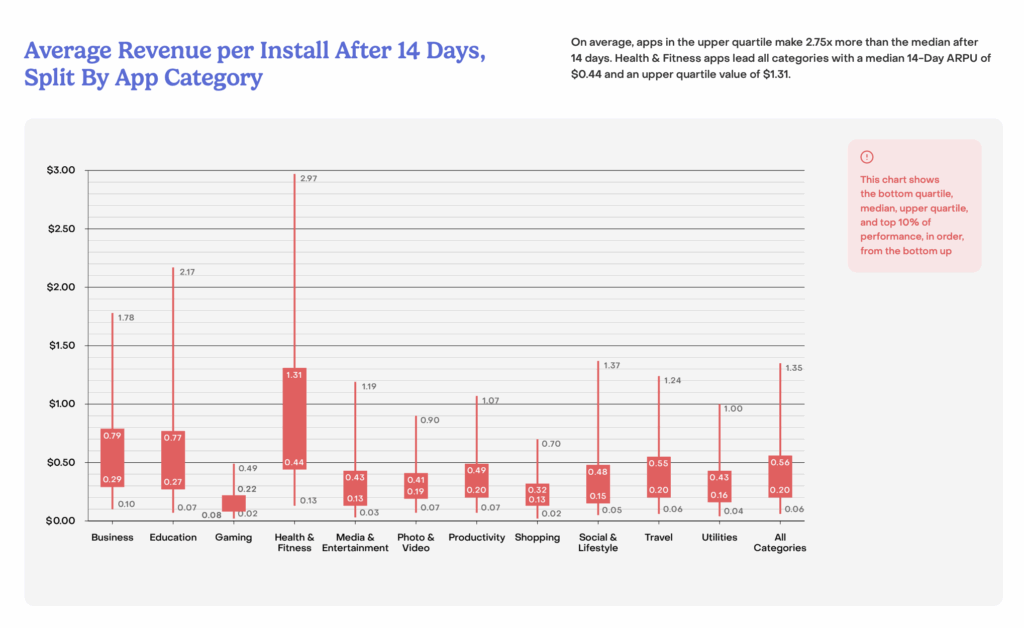
